The videos below show the 16× upsampling result of our method (right) applied to the low-resolution input (left). The two videos are synchronized, so the same frame is shown at the same time. An interactive zoom lens makes it easier to see the differences between the low-res input and our output. Zoomed-in views are enlarged by a factor of 2.5, which shows the output at approximately the native video resolution (1024×1024 px).

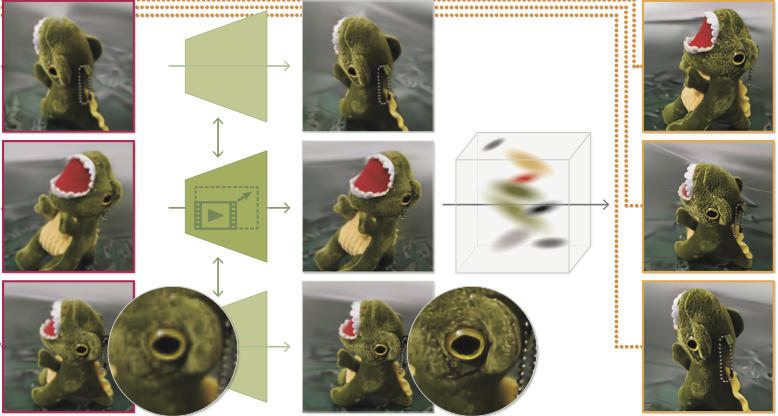

Given an input low-res 3D representation, which can be in various formats, we first sample a smooth camera trajectory and render an intermediate low-resolution video. We then upsample this video using existing video upsamplers, obtaining higher-resolution frames with sharper and more vivid details. Next, we perform 3D optimization to improve geometric and texture details. Our method, SuperGaussian, produces a final 3D representation in the form of high-resolution Gaussian Splats.
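The trajectory-sampling step is not specified in detail here. The sketch below makes it concrete under a stated assumption: the camera orbits the object center at a fixed radius, with elevation varying sinusoidally so the path is smooth and closes into a loop. The function name `sample_orbit_trajectory` and its parameters are hypothetical illustrations, not SuperGaussian's actual sampler.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def _normalize(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def sample_orbit_trajectory(
    num_frames: int,
    radius: float = 2.0,
    base_elevation_deg: float = 20.0,
    elevation_amplitude_deg: float = 10.0,
    target: Vec3 = (0.0, 0.0, 0.0),
) -> List[Tuple[Vec3, Tuple[Vec3, Vec3, Vec3]]]:
    """Hypothetical smooth orbit: returns (position, (right, up, forward)) per frame.

    Assumption: y is the world up axis and the camera always looks at `target`.
    """
    world_up: Vec3 = (0.0, 1.0, 0.0)
    poses = []
    for i in range(num_frames):
        # Endpoint excluded, so the last frame flows smoothly back into the first.
        t = i / num_frames
        azimuth = 2.0 * math.pi * t
        # Sinusoidal elevation keeps the path smooth while varying the view angle.
        elevation = math.radians(
            base_elevation_deg + elevation_amplitude_deg * math.sin(2.0 * math.pi * t))
        pos = (target[0] + radius * math.cos(elevation) * math.cos(azimuth),
               target[1] + radius * math.sin(elevation),
               target[2] + radius * math.cos(elevation) * math.sin(azimuth))
        # Look-at frame: forward points from the camera toward the target.
        forward = _normalize((target[0] - pos[0], target[1] - pos[1], target[2] - pos[2]))
        right = _normalize(_cross(forward, world_up))
        up = _cross(right, forward)  # unit length, since right is orthogonal to forward
        poses.append((pos, (right, up, forward)))
    return poses


poses = sample_orbit_trajectory(120)
print(len(poses), poses[0][0])
```

Each pose would then be passed to whatever renderer the input format supports to produce the low-resolution video. An orbit is only one reasonable choice. The key property is that consecutive views are close together, which gives the video upsampler temporally coherent input.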

Pipeline overview (figure panels): Low-res input → Trajectory sampling → Video Upsampling → Reconstruction.